How many generations of computers are there?

Computer generations are defined by major technological changes in computers, like the move from vacuum tubes to transistors. As of 2024, there are five generations of computers.

Review each of the generations below for more information and examples of computers and technology that fall into each generation.

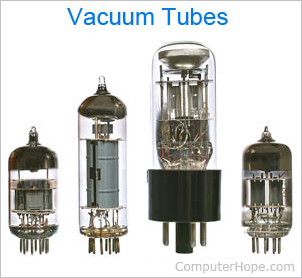

First generation (1940 - 1956)

The first generation of computers used vacuum tubes as their core technology. Vacuum tubes were widely used in computers from 1940 through 1956. Because vacuum tubes were large components, first-generation computers were also large, and some took up an entire room.

The ENIAC (Electronic Numerical Integrator and Computer) is a great example of a first-generation computer. It consisted of roughly 18,000 vacuum tubes, 10,000 capacitors, and 70,000 resistors. It weighed about 30 tons and required a large room to house it. Other examples of first-generation computers include the EDSAC (Electronic Delay Storage Automatic Calculator), IBM 701, and Manchester Mark 1.

Second generation (1956 - 1963)

The second generation of computers used transistors instead of vacuum tubes. Transistors were widely used in computers from 1956 to 1963. Because transistors were smaller than vacuum tubes, computers became smaller, faster, and cheaper to build.

One of the first computers to use transistors was the TX-0, introduced in 1956. Other computers that used transistors include the IBM 7070, Philco Transac S-1000, and RCA 501.

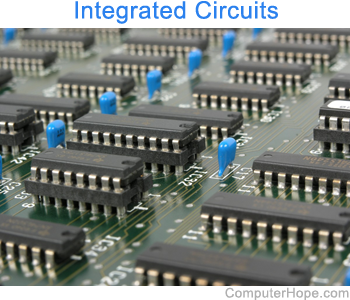

Third generation (1964 - 1971)

The third generation of computers introduced the use of ICs (Integrated Circuits). Using ICs helped reduce the size of computers even further than in the second generation, and also made them faster.

Nearly all computers since the mid to late 1960s have used ICs. While the third generation is considered by many people to have spanned from 1964 to 1971, ICs are still used in computers today. More than 50 years later, today's computers have deep roots going back to the third generation.

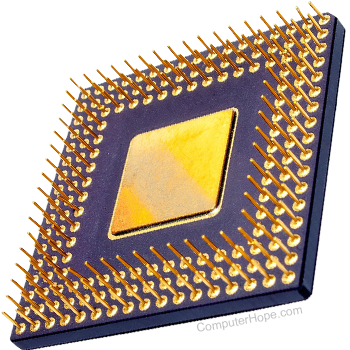

Fourth generation (1971 - 2010)

The fourth generation of computers took advantage of the invention of the microprocessor, commonly known as a CPU (Central Processing Unit). Microprocessors, built on integrated circuits, made it possible for computers to fit easily on a desk and led to the introduction of the laptop.

Early computers to use a microprocessor include the Altair 8800, IBM 5100, and Micral. Today's computers still use microprocessors, even though the fourth generation is considered to have ended in 2010.

Fifth generation (2010 to present)

The fifth generation of computers is beginning to use AI (Artificial Intelligence), an exciting technology with many potential applications around the world. Leaps have been made in AI technology and computers, but there is still room for much improvement.

One of the more well-known examples of AI in computers is IBM's Watson, which was featured on the TV show Jeopardy! as a contestant. Other more recent examples include ChatGPT and the introduction of AI PCs.

Sixth generation (future generations)

As of 2024, most people still consider us to be in the fifth generation as AI continues to develop. One possible contender for a future sixth generation is the quantum computer. However, until quantum computing becomes more developed and widely used, it remains only a promising idea.

Some people also consider nanotechnology to be part of the sixth generation. Like quantum computing, nanotechnology is largely still in its infancy and requires more development before becoming widely used.

With a new generation of computers, how we interact with a computer may also change. Possible new ways of interacting with the next generation of computers include using only our voice, AR (Augmented Reality), VR (Virtual Reality), or MR (Mixed Reality).